The proper use of technology enables businesses to be more efficient. Our recent research into

technology for business innovation found

that 56 percent indicate innovative technology is very important, yet

only 9 percent are very satisfied with theirs, showing plenty of room

for improvement. As we enter 2013, businesses have more choices than

ever for technology to improve business and IT. Our firm has identified

six key technologies that give organizations significant competitive

advantages: big data, business analytics, business and social

collaboration, cloud computing, mobile technology and social media. Our

research agenda for 2013 is designed to help organizations assess and

analyze these technologies and make the best possible decisions.

Big Data

Big data helps business and IT organizations manage and use information.

Our technology innovation research finds

only 14 percent of businesses today are very satisfied with their

existing big data technology. At the same time, organizations that

utilize big data effectively

have improved their business significantly, according to 28 percent of

organizations. Our research in 2013 will build on

our assessment of big data in 2012.

We will do a benchmark research study on big data analytics and another

on information optimization. We see that organizations are investing in

information assets that require big data and the analytics associated

with it to refine information and optimize business activities. Big data

can have significant business value, but using it requires that IT

coordinate with business on the benefits they can achieve. Making a

business case for an investment in big data technology can help

organizations address the top issue of it being too expensive, as found

in almost half (44%) of organizations. At the same time, organizations

need to ensure they have the competencies to meet big data needs, which

include not just the technology for storage and access but also

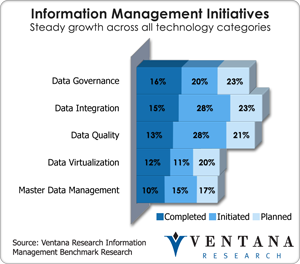

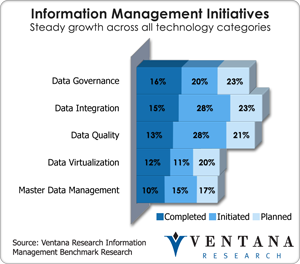

underlying information management issues such as data governance, data

integration and data quality, which

our research in 2012 found are still in embryonic form in most organizations.

Business Analytics

Our recent research on technology innovation found business analytics

to be the top-ranked priority, and very important to more than half

(52%) of organizations. Business analytics is not just a technology to

get metrics faster but a set of processes to operate smarter with

information, to better visualize and apply advanced methods

such as predictive analytics, and to identify ways to better search and

present information for a broad range of business constituents. Our

research finds only half (51%) of organizations are satisfied with their

existing processes due to lack of skilled resources. More than half of

organizations say analytics are too hard to build and maintain or data

is not readily available. The most time-consuming aspects of the process

are data-related ones, according to 44 percent of organizations. The

technology too still has room to improve, with only 20 percent being

very satisfied. The most critical capabilities are in the areas of

predictive analytics (49%), visual discovery (48%) and taking action on

the outcomes of the analytics (46%). We also found in

our next-generation business intelligence research that

the use of mobile technologies such as tablets is growing across

organizations. We also found in our research a high priority to use

social collaboration technology with business analytics to work together

on making improvements in a shorter period of time than traditional email

or phone calls. Our research agenda in 2013 will investigate big data

analytics and next-generation business analytics approaches building on

top of

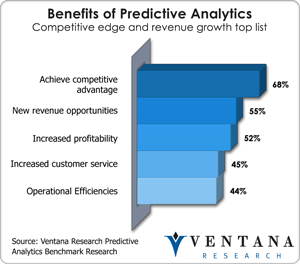

our research on predictive analytics,

which found that organizations still struggle to integrate predictive

analytics with information architectures to support analysts and data

scientists. Advancing the competencies and focusing on analytics are

critical processes, but businesses also need simpler communication of

results to help those responsible understand situations and consider

potential recommended actions. We hope that technology suppliers will

work to better align to the human dynamics of what really happens with

analytics to better support communicating observations and insights to

ensure that end goals are achieved.

Business and Social Collaboration

The

revolution in social media has expanded into business, bringing with it

social collaboration and helping business processes by connecting

people to achieve goals personally, departmentally or across an

organization. This new technology was ranked the second most important

technology in our research, but only 17 percent of organizations are

very confident in their ability to use the technology well. With most

organizations (86%) using shared folders and documents, it should be no

surprise that part of the issue is related to technology. Organizations

are evaluating new methods such as wall posting (45%), social

recognition (41%), earning badges and awards (40%) and broadcast or

Twitter-like capabilities (39%). With only a quarter of organizations

being satisfied with their existing approaches, we see a lot of changes

coming in 2013 with regard to the technologies selected and deployed. Our

research in 2013 will examine where collaboration is critical in areas

of human capital management, sales, customer engagement and even

finance. Building on top of some groundbreaking research across business

and vertical industries, we see business advancing rapidly with or

without IT support, since business and social collaboration can be

easily onboarded through the use of cloud computing. Unfortunately

organizations are mixed in the methods they prefer to use to access

collaboration – embedded in applications, through Microsoft Office,

embedded within tools like business intelligence or stand-alone – making

it complex to have consistency for users and their interactions. Using

social collaboration with business analytics is a growing priority and

organizations will need to assess their technologies to see if they meet

this need. We believe that social collaboration will help bridge

generational divides between workers as it becomes more easily

accessible through web and mobile technologies, allowing managers and

workers to engage anytime or anyplace. Focusing on the benefits of

social collaboration, such as knowledge sharing, is critical;

our research finds it is the top need in 49 percent of organizations.

Cloud Computing

Our research finds that businesses don’t see cloud computing as

innovative technology but rather as a utility that is becoming a standard

method that can be easily accessed and leveraged as part of their

portfolio of computing options. These faster methods to onboard

applications have become easy and in most cases require little IT

involvement.

But beyond the simplicity for business and potential chaos for IT to

eventually govern and support, the cloud computing environment is now a

viable platform for IT to leverage in a multitude of methods, from IT

infrastructure to developing and operating applications. The cloud

computing environment can be used as a central point for integrating

data and storing it for the enterprise or for customers and suppliers,

but most organizations have not automated the integration of data to

support business processes or business analytics and decision support.

The lack of automation has increased concerns for data security, which

63 percent of organizations in

our data in the cloud research find

to be a major concern. In all of our research in 2012, the preference

for cloud computing is growing across lines of business and especially

in areas like sales, customer service and human capital management. In

2013 we plan to further assess the advancements in cloud computing, from

big data and analytics to information that can be leveraged from a

broad range of applications and services.

Mobile Technology

The use of smartphones and tablets has become common among consumers

who are also workers in organizations. Mobile technology is a new

platform on which organizations can deploy applications and tools for a

wide array of business needs. Yet

our next-generation workforce

management research finds only 8 percent of organizations indicate they

have everything they need on these technologies, and only a quarter

more indicate they have most but not all they need available, which

leaves a large number of organizations not able to meet their mobile

business needs. This might be why only 20 percent are very confident in

their use of mobile technology today. The debate on whether to use

native applications and tools or operate across a web browser

environment still looms, with native (39%) outpacing browser (33%) and a

fifth having no preference. Bring your own device (BYOD) is another

area of friction, where 39 percent of organizations allow this approach

with smartphones and 45 percent with tablets. Organizations have many

opportunities to determine how to use mobile technology effectively, and

can derive many benefits. Our next-generation business intelligence

research found increased workforce productivity was at the top of the

list in 55 percent of organizations. Our research in 2013 will further

investigate the use of tablets across the line of business, since this

was found to have the largest growth planned (34%), while smartphones

are more established.

Social Media

Social media is a new path for organizations to use to expand their

corporate footprint to a broader audience and to gain brand awareness by

marketing products and services. This new channel of opportunity

enables organizations to rethink how they operate many of their business

processes, including the ones that they use to find new talent and track candidates into an organization. In 2012

our research into social media and recruiting found

that only 7 percent of organizations are very confident in their use of this

channel, but half of organizations are planning to change how they use

social media over the next year; for instance, 81 percent of

organizations have identified it as a method to identify new talent

pools. In 2013 we will continue to examine best practices and benefits

of investments in this channel. We will also assess social media as a

new channel for customers to engage with organizations through a new

benchmark in

next-generation customer engagement.

Our research in 2012 found organizations benefit from using this

channel to handle a broad range of customer questions and issues.

While new technologies can help business innovate, what’s old is

still new, and requires a foundation of skills and resources. For

example, with big data, those organizations that have information

management competencies to automate big data efforts will find

themselves further ahead, as they leverage the core skills of data

integration to handle more data environments. Those organizations that

use business and social collaboration to connect people and processes

more efficiently than conference calls and email will better leverage

their human capital investments.

At the same time new techniques can make it simpler to gain value

from existing technology investments, such as advancements in the use of

text to present analytics in a readable form, new methods to use

visualization as a discovery tool on analytics, and the ability to

engage employees by using new and more social collaborative methods.

Taking advantage of this technology requires smart use of best

practices, leadership from the top and agreement about the desired

outcome. Organizations need to have technology in place to develop a

business case with balanced evaluation criteria that are not about the

vision a vendor has but rather about what the vendor can provide to

advance business efforts. We use this practical approach in our vendor

and product assessment methodology and rating

called the Value Index, which we will use to assess more than 100 vendors in 2013.

Organizations

should explore resources in the company to determine if necessary skill

sets exist, since their lack is the top barrier to adoption of

technology according to 51 percent of organizations as

found in our research.

Part of this process is the ability to know whether the technology can

adapt to the workers’ needs and capabilities, and whether it requires

weeks of training. Organizations should also look to the future and

examine how to use cloud computing to rent technology, and how to use

mobile technology to enhance collaboration. They should also keep pace

with peers and competitors through the use of benchmarks and industry

comparisons.

You can depend on Ventana Research to provide sound facts and

pragmatic guidance to help you leverage technology to gain a competitive

advantage in your business and innovate in your processes and with your

workforce.

Original Source http://marksmith.ventanaresearch.com/2013/01/29/technology-innovation-in-2013-is-a-business-and-it-priority/

Editor’s

note: The following announcement from the Department of Energy provides

context on a potentially very virtuous use of advanced data analysis

capabilities to help solve real-world challenges regarding energy costs

in the US. Before you get too excited about the potential here, remember

to read the details. As you dig deeper into the list of projects you

will note some potential for continued government-contractor goofiness

and waste, like giving $600k to SRI to have them develop new software to

read scientific publications. Seems like they could just buy some basic

discovery tools for that or if they wanted to really use advanced big

data tools could start by leveraging the Apache Hadoop framework first.

So read the below but remember, the devil is in the details. -bg

Could it really be that pie charts might not be as
